Following from my previous post, AI-generated images with Stable Diffusion on an M1 mac: This time, using the image-to-image script, which takes an input “seed” image, in addition to the text prompt as inputs. In this case the model will use the shapes and colors in the input image as a base for the output AI-generated image.

Running Stable Diffusion img2img

Running img2img.py from Ben Firshman’s bfirsh/apple-silicon-mps-support repository will return an error from ldm.util import instantiate_from_config ModuleNotFoundError: No module named 'ldm'

To fix this, probably because I’m running img2img.py from the stable-diffusion home folder, edit img2img.py and add sys.path.append() before ldm is imported, as below:

from contextlib import contextmanager, nullcontext

sys.path.append(os.path.join(os.path.dirname(__file__), ".."))

from ldm.util import instantiate_from_configI created an inputs folder, where I placed my roough input sketch in PNG format (you can’t use my image directly, which is a JPG copy):

python scripts/img2img.py --n_samples 1 --init-img inputs/input.png \

--prompt "a very realistic spaceman on the moon with the earth and a spaceship in the background"--plms does not work, as the code contains raise NotImplementedError("PLMS sampler not (yet) supported").

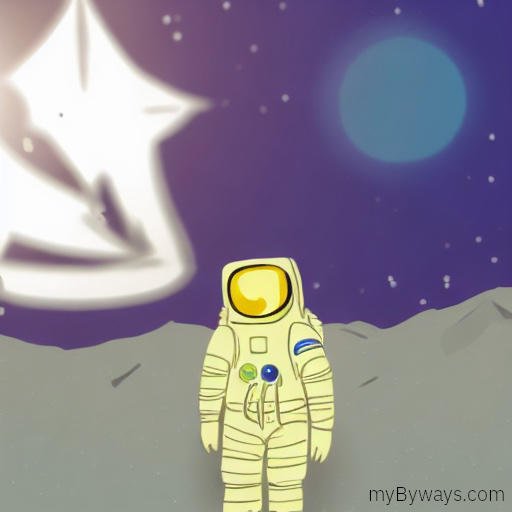

And here is the output:

Very nice! I fed the output in as input and repeted this process two more times, netting me this image:

Pretty cool.

Aside: I also tried to use inpaint.py but the version included does not seem to be working as expected. Have a feeling it’s left over from latent diffusion and not specifically to do with stable diffusion, since is not input text prompt. Anyway....

For more examples of Stable Diffusion output including img2img and in-painting, head over to /r/StableDiffusion on Reddit. Crazy stuff.